The Meaning of "The Birth of Bits"

A bit makes it possible to divide everything into two choices, on or off, using light or electricity. A bit therefore takes only two values, 0 and 1, and is written in binary notation. Historically, the encoding of data as two discrete states dates back to the punch cards developed by Basile Bouchon and Jean-Baptiste Falcon in 1732. IBM and others later adopted this technology in the development and application of early computers, and for a long time it stood as the representative technology for digital bits. Morse code, first used for telegraph communication in 1844, marks another important historical turning point.

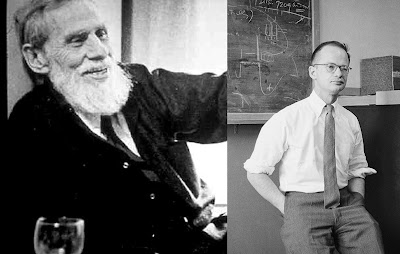

The first person to use the word "bit" in print was Claude E. Shannon, in his paper "A Mathematical Theory of Communication," published in 1948. He credited the word to John W. Tukey, who had shortened "binary digit" to "bit" in a Bell Labs memo dated January 9, 1947. In organizing the concept of the bit, Shannon was also influenced by Alan Turing, the brilliant British mathematician. In 1943, Turing visited Bell Labs to work on cryptography, and he occasionally shared lunch with Shannon to talk about the future of artificial machines.

Shannon refined the notion of "information" while searching for a theoretical framework that would connect complex concepts, just as Newton gave refined definitions to ambiguous words such as mass, motion, force, and time.

A bit is a small, abstract fundamental particle: a binary digit, a flip-flop. Some scientists argue that bits may be more fundamental than matter itself. On this view, the bit is a piece that cannot be broken down any further, and information is the core of existence. John Archibald Wheeler, who studied quantum mechanics, made this claim most forcefully with his phrase "It from Bit," meaning that everything that exists derives from information. He found the concept of the bit very useful for understanding the "paradox of the observer," in which experimental results are influenced by the observer. Observers do not merely observe; they ultimately pose questions and make statements that are expressed in individual bits. In the final analysis, the reality we know arises from posing yes-or-no questions. Wheeler called this a "participatory universe." Seen this way, the universe can be described as a computer, a universal information-processing machine. The idea that information could be the fundamental quantity at the core of physics had been presented earlier by Frederick W. Kantor, whose 1977 book "Information Mechanics" developed the idea in detail.

Bits are also important because today's mechanical, electrical, and electronic devices can represent them very well. The values 0 and 1 can be defined simply by whether a switch is open or closed, whether electricity or light is on or off, or whether a voltage is high or low. Various types of devices can therefore express our world in bits, which gives us a totally new kind of atom, one without mass or volume that can move at the speed of light.
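To make this concrete, here is a minimal Python sketch of how a short piece of text can be expressed as a sequence of on/off values and then recovered. The function names `text_to_bits` and `bits_to_text` are made up for this illustration, and the choice of UTF-8 with 8 bits per byte is an assumption, one common convention among many.

```python
def text_to_bits(text: str) -> str:
    """Encode text as UTF-8 and return its bits as a string of 0s and 1s."""
    # Each byte becomes exactly 8 binary digits, most significant bit first.
    return "".join(f"{byte:08b}" for byte in text.encode("utf-8"))


def bits_to_text(bits: str) -> str:
    """Rebuild the text from a string of bits, reading 8 bits per byte."""
    data = bytes(int(bits[i:i + 8], 2) for i in range(0, len(bits), 8))
    return data.decode("utf-8")


bits = text_to_bits("bit")
print(bits)                # 011000100110100101110100
print(bits_to_text(bits))  # bit
```

Each 0 or 1 in the printed string could just as well be a switch position, a pulse of light, or a voltage level. The meaning comes entirely from the agreed convention for reading the sequence back.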

More recently, the bit has begun to reach into the world of quantum mechanics. How do photons, electrons, and other particles interact? They exchange bits, transmit quantum states, and process information. This kind of approach is now called "digital physics" (also referred to as digital ontology or digital philosophy). It is a collection of theoretical perspectives based on the premise that the universe is describable by information.

Come to think of it, computers were invented only recently to store, process, and deliver information. We know how to arrange, analyze, sort, and combine information. The information itself, however, has been there since the birth of the universe. Modern information technology has greatly increased storage capacity and transmission speed. Yet throughout human history, information technologies such as writing, printing, and encyclopedias have improved continuously and have greatly changed the course of history. The history of information can therefore be regarded as the Big History of the universe. Claude Shannon introduced the term "bit," and we now understand the world in totally different ways than before.
